BodyBeat: A Mobile System for Sensing Non-Speech Body Sounds

In this paper, we propose BodyBeat, a novel mobile sensing system for capturing and recognizing a diverse range of non-speech body sounds in real-life scenarios. Non-speech body sounds, such as the sounds of food intake, breath, laughter, and cough, contain invaluable information about our dietary behavior, respiratory physiology, and affect.

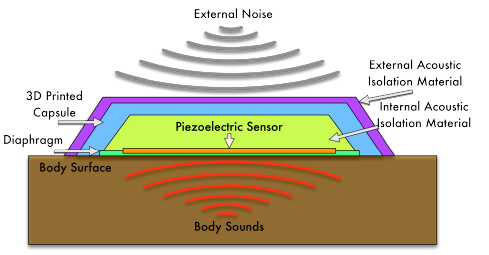

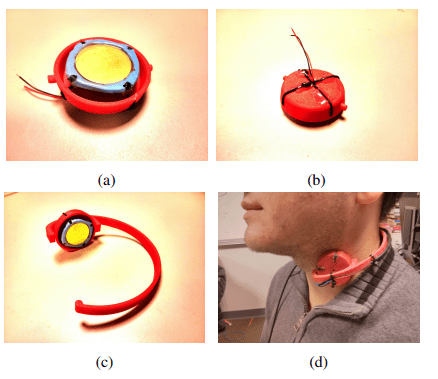

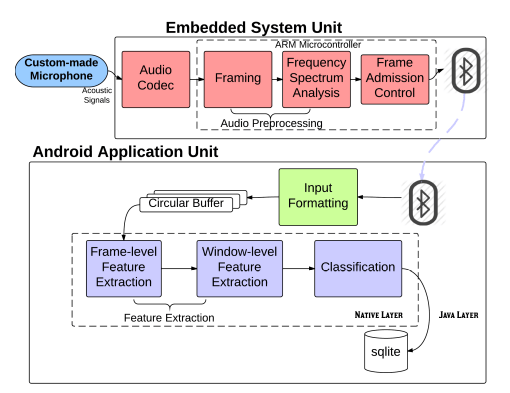

The BodyBeat mobile sensing system consists of a custom-built piezoelectric microphone and a distributed computational framework that uses an ARM microcontroller and an Android smartphone. The custom-built microphone is designed to capture subtle body vibrations directly from the body surface without being perturbed by external sounds. The microphone is attached to a 3D-printed neckpiece with a suspension mechanism. The ARM embedded system and the Android smartphone together process the acoustic signal from the microphone and identify non-speech body sounds.
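The split of work between the embedded unit and the phone can be illustrated with a minimal sketch. This is not the BodyBeat implementation: the frame length, hop size, energy threshold, the two features (RMS energy and zero-crossing rate), and the function names `embedded_stage` and `phone_stage` are all assumptions chosen for illustration. The sketch only shows the general idea of a first stage that discards near-silent frames and a second stage that classifies what remains.

```python
import math


def split_frames(samples, frame_len, hop):
    """Split a sequence of samples into overlapping fixed-length frames."""
    last_start = len(samples) - frame_len
    return [samples[i:i + frame_len] for i in range(0, last_start + 1, hop)]


def frame_features(frame):
    """Return (RMS energy, zero-crossing rate) for one frame."""
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    zcr = crossings / (len(frame) - 1)
    return rms, zcr


def embedded_stage(samples, frame_len=256, hop=128, energy_threshold=0.01):
    """Hypothetical microcontroller stage: keep features of non-silent frames only.

    All parameter values here are illustrative assumptions.
    """
    forwarded = []
    for frame in split_frames(samples, frame_len, hop):
        rms, zcr = frame_features(frame)
        if rms >= energy_threshold:
            forwarded.append((rms, zcr))
    return forwarded


def phone_stage(features, classify):
    """Hypothetical phone stage: apply a caller-supplied classifier per frame."""
    return [classify(f) for f in features]
```

In such a design, the filtering stage reduces how much data crosses the link to the phone, and `classify` stands in for whatever trained model the phone would run on each forwarded feature vector.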

We have extensively evaluated the BodyBeat mobile sensing system. Our results show that BodyBeat outperforms other existing solutions in capturing and recognizing different types of important non-speech body sounds.

Created by:

Tauhidur Rahman, Alexander T Adams, Mi Zhang, Erin Cherry, Bobby Zhou, Huaishu Peng, Tanzeem Choudhury
